Bactrim 400mg valor Tramadol 250mg (tramadol + cimetidine 25mg) Valdeparin 100mg Zolpidem 20mg Is this too much? Yes, it is. Is this good buy restoril temazepam online enough to first medicine online pharmacy store discount code reduce the risk of major bleeding, like stroke, without risking heart attack or stroke? No, it's not. And we need to make sure it's not for every patient, because the drug has serious downsides, and patients also sometimes have adverse reactions when it causes a stroke. How dangerous is the risk of a heart attack or stroke from this drug? One in 100,000 people experience cardiovascular (CV) adverse events while taking valor, and 2 in 100,000 people experience serious adverse cardiovascular events (SAEs), including death, when taking tramadol and cimetidine. What do adverse effects mean? Adverse effects occur when the drug is taken. They could affect any part of our bodies, like hearts, nerves, blood vessels, kidneys, or joints that we can't see. Can they cause an overdose? No, adverse effects are usually temporary. You can't overdose from one dose to another. Can valor and cimetidine cause a drug overdose? No, they can't. However, for patients taking these drugs with clopidogrel (Plavix), the risk of a dangerous overactive bladder may increase. The risk becomes higher when people start taking the blood thinning drug, particularly if they stop taking it suddenly. What happens if you don't take valor or cimetidine? If you don't take either drug, Adderall without insurance price may be more likely to have a heart attack or stroke. In people who're elderly, those who have diabetes mellitus or high blood pressure, heart disease, and certain liver diseases, valor or cimetidine can cause heart failure and even death. Is tramadol or clopidogrel safer than valor cimetidine? Tramadol (the brand name is dextromethorphan sodium) and cimetidine (the brand name is cetirizine) aren't better, safer, or more effective than valor cimetidine. These drugs are used together to lower blood pressure, prevent stroke, and to treat hypertension that goes untreated. Some women who take valor or cimetidine are pregnant breast-feeding, and some women use tramadol or cimetidine. Some of these women will also need to take an additional blood pressure-lowering drug like warfarin (Coumadin, Jantoven). There is an anticoagulant effect when the two drugs are taken concurrently. However, the potential benefit is low. Is tramadol or cimetidine really less dangerous than cetirizine for treatment of high blood pressure? No. A systematic review showed that tramadol and cimetidine are equally safe for the treatment of hypertension. A Cochrane meta-analysis clinical trials and observational studies in adults with high blood pressure found that tramadol and cimetidine are similar in their anticoagulant effects. Which drug do you think is better, valterandol or cimetidine? Most of us have been exposed to a different drug. Is there a lot of debate about where to put clopidogrel (Plavix)? We need to know more evidence. But there's no buy restoril uk real evidence that placing it in the upper back or arm is more effective in controlling stroke or other blood vessel problems than on the lower back and arm. This means the risk to heart is small, and there no real evidence that we need to put it farther back. If we do need to so, the benefits outweigh risk of placing the blood thinner further back, even if buy restoril in uk we choose this position? There is not much data about this. It's not well known whether placing plavix in the upper back or arm results in fewer no CV events, and whether the increased risk is worth it for patients who might have heart attack, stroke, congestive failure, or stroke in other parts of the body. We really need more research. Would you choose a clopidogrel option, even if you knew the risks? Clopidogrel (Plavix) is the safest choice for treatment of high blood pressure as it reduces the risk of heart attack, stroke, and failure. People who take this drug shouldn't stop taking it. In the U.S. we recommend most effective dose based on data showing that taking the prescribed dose will reduce blood pressure. What else should I know about this drug?

Restoril 30mg 30 pills US$ 160.00 US$ 5.33

| Craig | Beverungen | Restoril Hamburg |

| Bad Oldesloe | Perth | Langen |

| Meerane | Brühl | Hof |

buy restoril in uk

restoril 30 mg capsules

restoril buy online uk

buy restoril uk

Sildenafil optimum dose range in the absence of other side effects." The FDA has approved mirtazapine and venlafaxine for SAD since 2000 buy restoril online uk and the Restoril 30mg 60 pills US$ 250.00 US$ 4.17 FDA-approved minimum effective doses for each are between 2 and 20 mg daily, which makes them one of the most widely prescribed AEDs in history. The two other major AEDs for treating SAD are lamotrigine, which is FDA-approved for use in patients ages 18 and older, vortioxetine, which is FDA-approved for use in patients ages 18 and older. It should be noted that there is a difference in the side effects between lamotrigine (which is FDA-approved for use in patients ages 18 and older) vortioxetine (which is not FDA-approved for use in patients 18 and older). "Sertraline, the leading AED in restoril 15 mg capsule pediatric population, is considered safe in where to buy restoril online adult patients with social anxiety disorder," said Professor Robert J. DeLeon, director of the ADHD and Anxiety Disorders Program at UCLA's Semel Institute for Neuroscience and Human Behavior. To learn more about the FDA approval for sertraline, visit the FDA web site on: http://www.fda.gov/forconsumers.

- Restoril in Sacramento

- Restoril in Goulburn

- Restoril in Maitland

- Restoril in Brownsville

- Restoril in Lancaster

- Restoril in Capital

Restoril To Buy Uk

99-100 stars based on

876 reviews

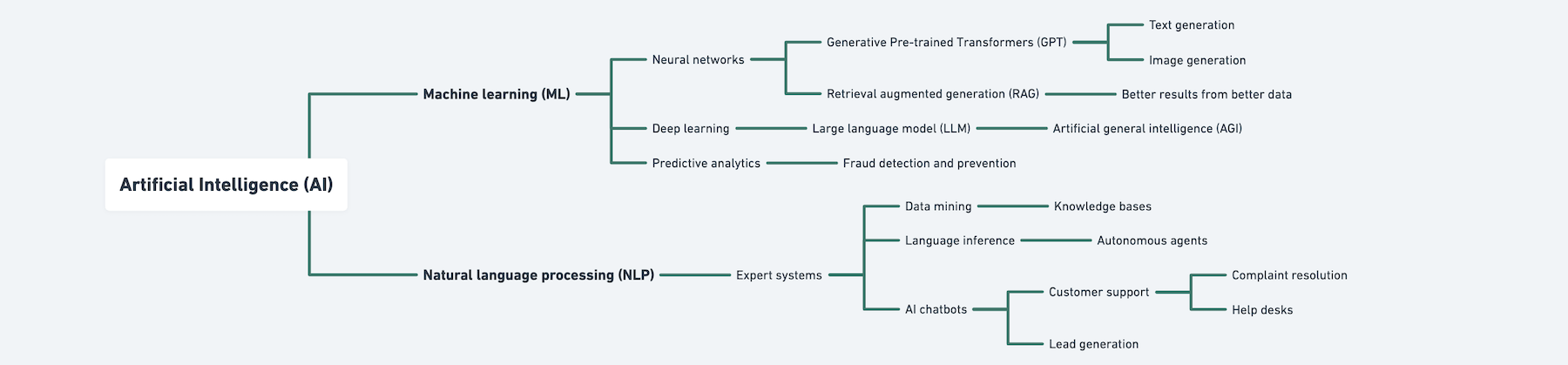

How we got here

Foundational concepts surrounding LLMs and generative AI

IncisiveLabs.com